In a move that could significantly reshape the mobile AI landscape, Google has officially released an open-source app that allows users to run AI models locally on their smartphones—with no internet connection required.

This powerful new tool is free, offline-capable, and fully open-source. Best of all, it pairs seamlessly with Google’s latest Gemma 3n open-source multimodal AI models, delivering an efficient, self-contained AI experience directly on Android devices.

What Is the Google AI Edge Gallery App?

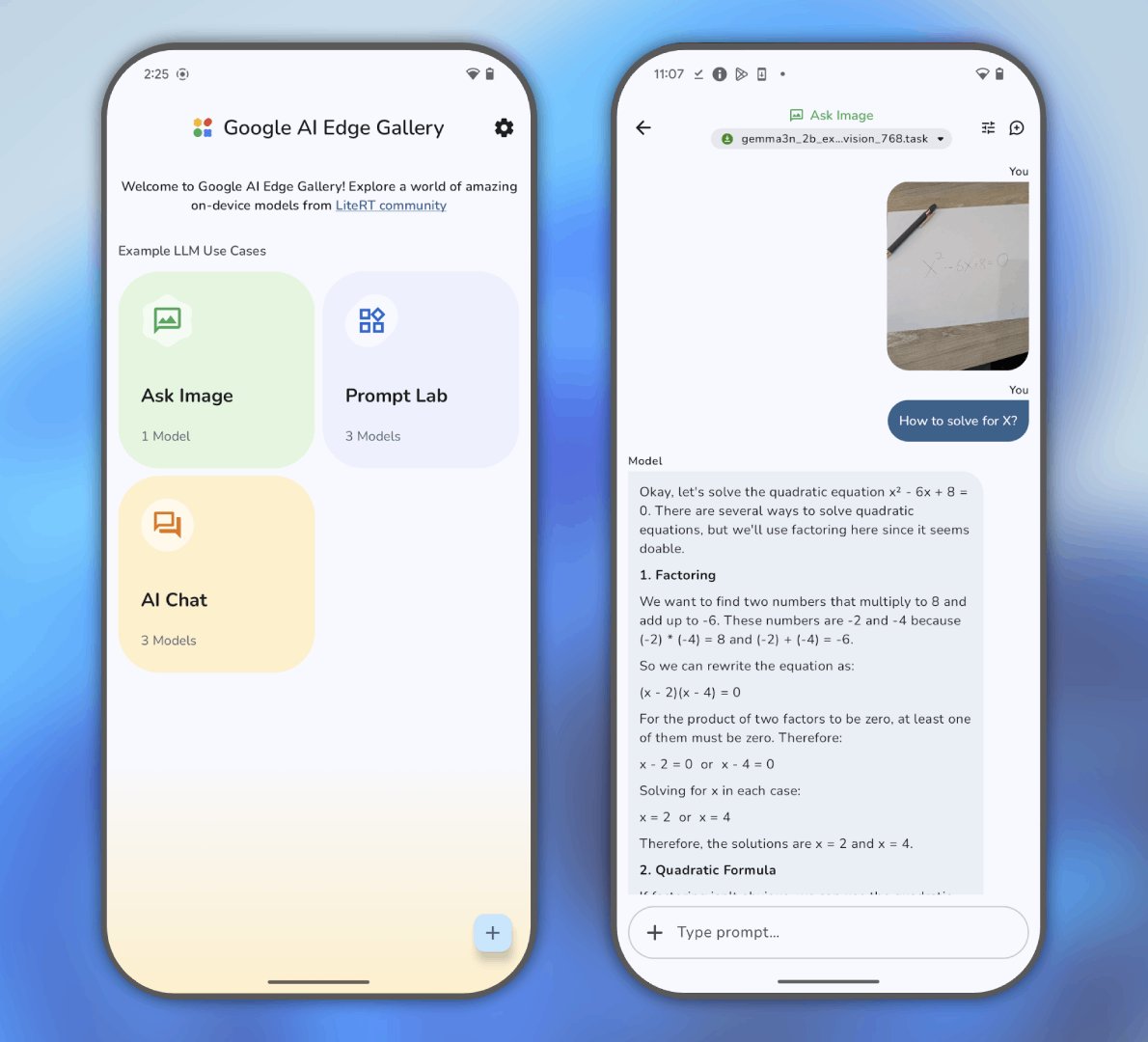

Google AI Edge Gallery is an experimental open-source app aimed at developers and advanced users who want to experiment with on-device AI model inference.

Unlike most AI apps that require cloud access, this one runs completely on your phone, offering:

- Full offline functionality

- Multimodal support (images, text, etc.)

- Zero usage fees

- Custom model integration

Although it’s still in alpha and not available on the Play Store, it’s fully accessible via GitHub for Android users, with an iOS version on the way.

Key Features

Once installed, the app gives users access to three core modules:

- Ask Image – Use vision-based prompts to interact with image data

- Prompt Lab – Customize and experiment with your own prompts or AI tasks

- AI Chat – A local chatbot experience that runs entirely on-device

Each section comes with pre-configured templates, but users can also import their own models to personalize the experience.

Once downloaded, everything functions 100% locally—no server calls, no data uploads.

How to Get Started

- Visit the GitHub repository: Head to the official Google AI Edge Gallery GitHub repo.

- Download the APK: Under the Releases section, download the latest .apk file and install it manually on your Android device. (Make sure to allow installations from unknown sources.)

- Explore and customize: Open the app and explore the templates in each module, or bring in your own Gemma-compatible models.
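For readers who would rather script the download step, here is a minimal Python sketch that uses the public GitHub Releases API to find the APK in a repository's latest release. The repository owner and name (`google-ai-edge/gallery`) are assumptions not stated in this article, so confirm them on the project page first. The helper names are illustrative and not part of any Google tooling.

```python
import json
import urllib.request
from typing import Optional

# Assumption: the repository owner and name below are not given in the article.
# Confirm them on the project's GitHub page before relying on this.
OWNER = "google-ai-edge"
REPO = "gallery"


def latest_release_url(owner: str, repo: str) -> str:
    """Build the GitHub REST API URL for a repository's latest release."""
    return f"https://api.github.com/repos/{owner}/{repo}/releases/latest"


def pick_apk_asset(release: dict) -> Optional[str]:
    """Return the download URL of the first .apk asset in a release, or None."""
    for asset in release.get("assets", []):
        if asset.get("name", "").lower().endswith(".apk"):
            return asset.get("browser_download_url")
    return None


def fetch_latest_release(owner: str, repo: str) -> dict:
    """Fetch the latest release metadata as a dict (requires network access)."""
    request = urllib.request.Request(
        latest_release_url(owner, repo),
        headers={"Accept": "application/vnd.github+json"},
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```

Calling `pick_apk_asset(fetch_latest_release(OWNER, REPO))` should return a direct download link if the latest release ships an `.apk` asset, and `None` otherwise. Installing the file still happens on the device as described above.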

Transparency & Documentation

Despite some initial skepticism and misinformation online, the app is officially documented by Google and targeted toward developers rather than general users. That’s why it’s distributed via GitHub instead of the Play Store.

Google provides detailed documentation for running large language models (LLMs) on Android via the MediaPipe framework, including instructions and sample applications.

Final Thoughts

Google’s release of the AI Edge Gallery is a notable step for mobile AI development. It’s a lightweight tool that brings recent models like Gemma 3n to your pocket without relying on the cloud.

Whether you’re an AI hobbyist, a mobile developer, or just curious about the future of local AI, this tool offers a fast, private, and open path to experimentation.

Download it. Explore it. Build the future of AI on your own phone.
